Building a voice Agentic Interface for a React Native app

With the Alan AI SDK for React Native, you can create a voice Agentic Interface and embed it in your React Native app. The Alan AI Platform provides the tools you need and uses Automatic Speech Recognition (ASR), Spoken Language Understanding (SLU) and Speech Synthesis technologies so that you can quickly build an Agentic Interface from scratch.

In this tutorial, we will create a simple React Native app with Alan AI voice and test drive it on the simulator. The app users will be able to tap the voice Agentic Interface button and give custom voice commands, and the Agentic Interface will reply to them.

What you will learn

How to add a voice interface to a React Native app

How to write simple voice commands for a React Native app

What you will need

To go through this tutorial, make sure the following prerequisites are met:

You have signed up to Alan AI Studio.

You have created a project in Alan AI Studio.

You have set up the React Native environment and it is functioning properly. For details, see the React Native documentation.

You have installed CocoaPods to manage dependencies for an Xcode project:

Terminal:

    sudo gem install cocoapods

Step 1: Create a React Native app

For this tutorial, we will be using a simple React Native app. Let’s create it.

On your machine, navigate to the folder in which the app will reside and run the following command:

Terminal:

    npx react-native init myApp

Run the app:

Terminal:

    cd myApp
    npx react-native run-ios

Step 2: Integrate the app with Alan AI

Now we will add the Alan AI agentic interface to our app. First, we will install the Alan AI React Native plugin:

In the app folder, run the following command:

Terminal:

    npm i @alan-ai/alan-sdk-react-native --save

Open the App.js file. At the top of the file, add the import statement:

App.js:

    import { AlanView } from '@alan-ai/alan-sdk-react-native';

To the function component, add the Alan AI agentic interface:

App.js:

    const App: () => Node = () => {
      return (
        <SafeAreaView style={backgroundStyle}>
          <StatusBar barStyle={isDarkMode ? 'light-content' : 'dark-content'} />
          <ScrollView
            contentInsetAdjustmentBehavior="automatic"
            style={backgroundStyle}>
            <Header />
            <View
              style={{
                backgroundColor: isDarkMode ? Colors.black : Colors.white,
              }}>
            </View>
          </ScrollView>
          {/* Adding the Alan AI agentic interface */}
          <View><AlanView projectid={''}/></View>
        </SafeAreaView>
      );
    };

In projectid, specify the Alan AI SDK key for your Alan AI Studio project. To get the key, in Alan AI Studio, at the top of the code editor, click Integrations and copy the value from the Alan SDK Key field.

App.js:

    const App: () => Node = () => {
      return (
        <SafeAreaView style={backgroundStyle}>
          <StatusBar barStyle={isDarkMode ? 'light-content' : 'dark-content'} />
          <ScrollView
            contentInsetAdjustmentBehavior="automatic"
            style={backgroundStyle}>
            <Header />
            <View
              style={{
                backgroundColor: isDarkMode ? Colors.black : Colors.white,
              }}>
            </View>
          </ScrollView>
          {/* Providing the Alan AI SDK key */}
          <View><AlanView projectid={'f19e47b478189e4e7d43485dbc3cb44a2e956eca572e1d8b807a3e2338fdd0dc/stage'}/></View>
        </SafeAreaView>
      );
    };
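An empty or mistyped key is an easy mistake at this step, so it can help to hold the key in a constant and sanity-check it before passing it to projectid. The following is a minimal sketch, not part of the Alan AI SDK: the names ALAN_SDK_KEY and looksLikeSdkKey are hypothetical, and the expected key shape (a hexadecimal string, a slash, and an environment name such as stage) is an assumption inferred only from the example key in this tutorial.

```javascript
// Hypothetical helper, NOT part of the Alan AI SDK.
// Assumption: a key looks like "<hex characters>/<environment>",
// which is inferred from the example key used in this tutorial.
const ALAN_SDK_KEY =
  'f19e47b478189e4e7d43485dbc3cb44a2e956eca572e1d8b807a3e2338fdd0dc/stage';

function looksLikeSdkKey(key) {
  // Reject non-strings and empty values, then check the assumed shape.
  return typeof key === 'string' && /^[0-9a-f]+\/[a-z]+$/.test(key);
}

if (!looksLikeSdkKey(ALAN_SDK_KEY)) {
  // Only a warning: the real format may differ from the assumption above.
  console.warn(
    'The Alan AI SDK key looks empty or malformed. ' +
      'Copy it again from Integrations in Alan AI Studio.'
  );
}
```

With a constant like this in place, the component could receive the key as projectid={ALAN_SDK_KEY} instead of an inline string.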

In the Terminal, navigate to the ios folder, open the Podfile and change the minimum iOS version to 11:

Podfile:

    platform :ios, '11.0'

Run the following command to install dependencies for the project:

Terminal:

    pod install

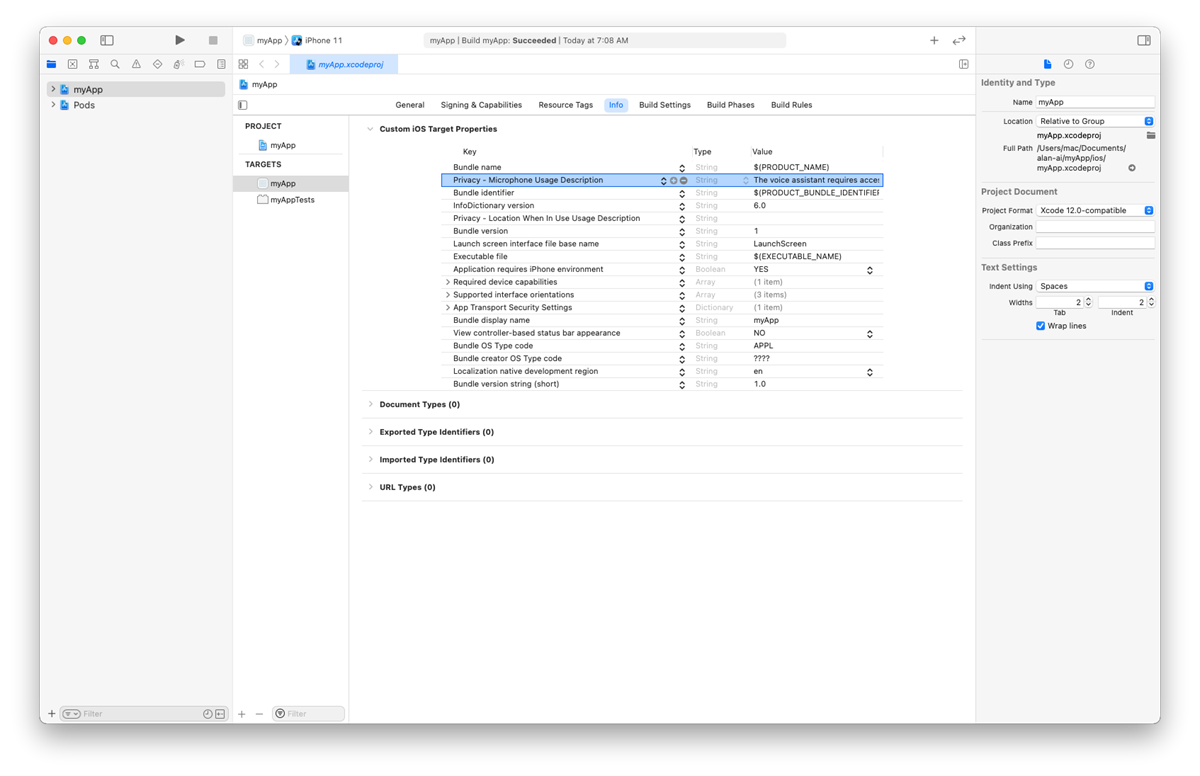

In iOS, the user must explicitly grant permission for an app to access the microphone and camera. Alan AI will use the microphone for voice interactions and the camera for testing Alan AI projects on mobile. We need to add keys whose descriptions tell the user why the app requires access to these resources.

In the ios folder, open the generated Xcode workspace file: myApp.xcworkspace.

In Xcode, go to the Info tab.

In the Custom iOS Target Properties section, hover over any key in the list and click the plus icon to the right.

From the list, select Privacy - Microphone Usage Description.

In the Value field to the right, provide a description for the added key. This description will be displayed to the user when the app is launched.

Repeat the steps above to add the Privacy - Camera Usage Description key.

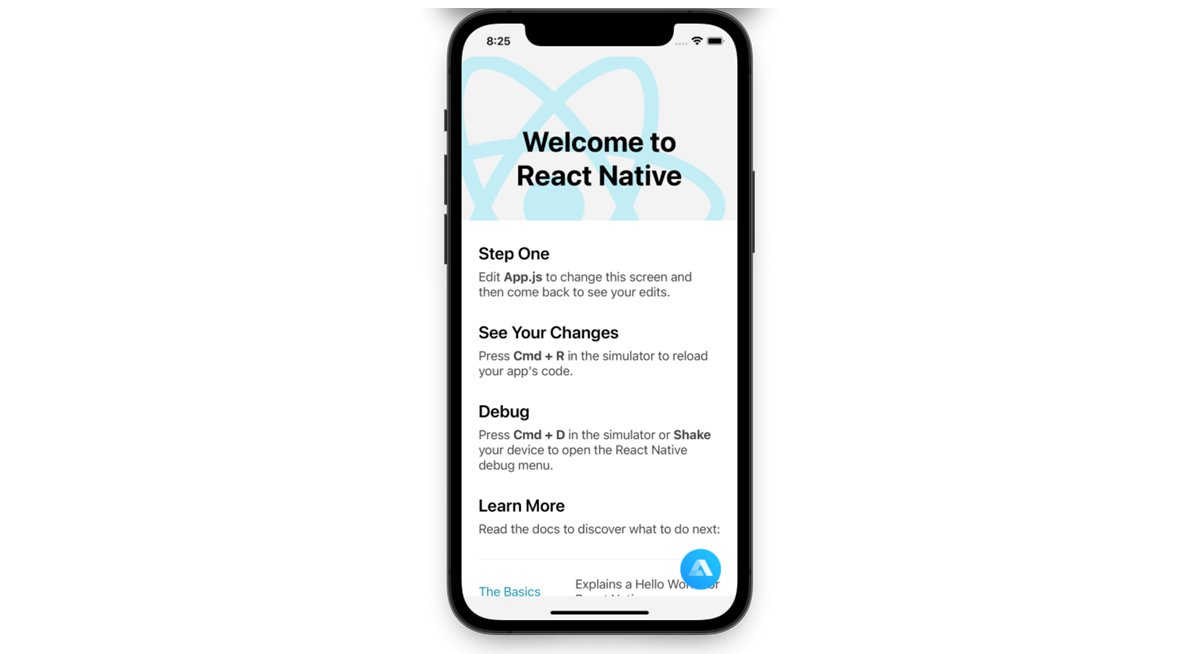

Run the app:

Terminal:

    npx react-native run-ios

The app will be built and launched. When the app accesses the device microphone, the message we specified for the Privacy - Microphone Usage Description key will be displayed.

In the bottom left corner, tap the Alan AI agentic interface and say: Hello world.

However, if you ask: How are you doing?, the Agentic Interface will not give an appropriate response. This is because the dialog script in Alan AI Studio does not yet contain the necessary voice commands.

Step 3: Add voice commands

Let’s add some voice commands so that we can interact with Alan AI. In Alan AI Studio, open the project and in the code editor, add the following intents:

    intent('What is your name?', p => {
        p.play('It is Alan, and yours?');
    });

    intent('How are you doing?', p => {
        p.play('Good, thank you. What about you?');
    });

Now tap the Alan AI agentic interface in the app and ask: What is your name? and How are you doing? The Agentic Interface will give the responses we provided in the added intents.
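To see why a phrase with no matching intent gets no useful reply, the sketch below runs the same two intents in plain Node.js against a small local stand-in for the Alan AI Studio runtime. The stand-in is an assumption made for illustration only: the local intent(), normalize() and ask() functions are hypothetical, they only do exact, case-insensitive matching, and they do not reflect how Alan AI actually recognizes speech. Passing a second pattern ('Who are you?') to one intent is also an assumption here; check the Alan AI dialog script documentation before relying on it.

```javascript
// Local stand-in for the Alan AI Studio runtime (hypothetical, for illustration).
// In Alan AI Studio, intent() is provided by the platform and you would keep
// only the two intent(...) calls below.
const handlers = [];

// Lowercase the phrase and drop punctuation so "Who are you?" equals "who are you".
function normalize(text) {
  return text.toLowerCase().replace(/[^a-z0-9 ]/g, '').trim();
}

// Register every pattern passed before the final callback argument.
function intent(...args) {
  const callback = args.pop();
  for (const pattern of args) {
    handlers.push({ pattern: normalize(pattern), callback });
  }
}

// Simulate a user phrase and collect whatever the matching intent plays.
function ask(phrase) {
  const replies = [];
  const p = { play: (text) => replies.push(text) };
  const match = handlers.find((h) => h.pattern === normalize(phrase));
  if (match) {
    match.callback(p);
  }
  return replies;
}

// The dialog script from this step, with one extra phrasing added.
intent('What is your name?', 'Who are you?', p => {
  p.play('It is Alan, and yours?');
});

intent('How are you doing?', p => {
  p.play('Good, thank you. What about you?');
});

console.log(ask('who are you'));        // one reply from the first intent
console.log(ask('What time is it?'));   // empty: no intent covers this phrase
```

The second call returns nothing because no intent covers that phrase, which mirrors what happened before the commands were added to the dialog script.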

Note

After integration, you may get a warning related to the onButton state. You can safely ignore it: the warning will be removed as soon as you add the onCommand handler to your app. For details, see the next tutorial: Sending commands to the app.

What’s next?

You can now proceed to building a voice interface with Alan AI. Here are some helpful resources:

Have a look at the next tutorial: Sending commands to the app.

Go to Dialog script concepts to learn about Alan AI concepts and functionality you can use to create a dialog script.

In Alan AI Git, get the React Native example app. Use this app to see how integration for React Native apps can be implemented.