Passing the app state to the dialog script (iOS)

When the user interacts with an iOS app, it may be necessary to send some data from the app to the dialog script. For example, you may need to provide the dialog script with information about the current app state.

In Alan AI, you can send data from the app to the dialog script in the following ways:

With visual state

With project API

In this tutorial, we will work with an iOS app with two views. We will send information about the current view to the dialog script with the help of the visual state. On the script side, we will use the passed state to do the following:

Create a voice command that will let Alan AI reply differently depending on the view open in the app

Create a command that can be matched only if the necessary view is open

What you will learn

How to pass the app state from an iOS app to the dialog script with the visual state

How to access the data passed with the visual state in the dialog script

How to filter intents by the app state

What you will need

For this tutorial, we will continue using the starter iOS app created in the previous tutorials. You can also use an example app provided by Alan AI: SwiftTutorial.part2.zip. This is an Xcode project of an app with two views that is already integrated with Alan AI.

Step 1: Send the app state to the dialog script

To send data from the app to the dialog script, you can use Alan AI’s visual state object. This method can be helpful, in particular, if the dialog flow in your app depends on the app state. In this case, you need to know the app state on the script side to adjust the dialog logic. For example, you may want to use the app state to filter out some voice commands, give responses applicable to the current app state and so on.

The visual state allows you to send an arbitrary JSON object from the app to the script. You can access the passed data in the script through the p.visual runtime variable.
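As a rough illustration of the data shape, the sketch below uses a plain object as a stand-in for the p runtime object, so it can be run outside Alan AI Studio. The readScreen helper and the "unknown" fallback are hypothetical names introduced for this example; they are not part of the Alan AI API.

```javascript
// Stand-in for the runtime object passed to a dialog script handler.
// In a real script, p.visual holds the JSON object sent by the app.
const fakeP = { visual: { screen: "first" } };

// Hypothetical helper: read the "screen" field from the visual state,
// falling back to "unknown" if the app has not sent any state yet.
function readScreen(p) {
    const visual = p.visual || {};
    return typeof visual.screen === "string" ? visual.screen : "unknown";
}

console.log(readScreen(fakeP));          // first
console.log(readScreen({ visual: {} })); // unknown
```

Guarding against a missing field like this is a design choice rather than a requirement; it only matters if a command can be triggered before the app has sent its first visual state.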

To send the visual state to the dialog script, let’s do the following:

In Xcode, open the ViewController.swift file and add the following function to the ViewController class:

ViewController.swift

    class ViewController: UINavigationController {
        /// Pass the given state to the Alan AI agentic interface
        func setVisualState(state: [AnyHashable: Any]) {
            self.button.setVisualState(state)
        }
    }

In this function, we call the setVisualState() method of the Alan AI agentic interface and pass state to it.

In the project tree in Xcode, Control-click the app folder and select New File. In the Source section, select Cocoa Touch Class and click Next. Name the class, for example, FirstViewController, and click Next. Then click Create. A new file named FirstViewController.swift will be added to your app folder.

Open the created file and update its content to the following:

FirstViewController.swift

    import UIKit

    class FirstViewController: UIViewController {
        override func viewDidLoad() {
            super.viewDidLoad()
        }

        /// Once the view is on the screen - send an updated visual state to Alan AI
        override func viewDidAppear(_ animated: Bool) {
            super.viewDidAppear(animated)

            /// Get the view controller with the Alan AI agentic interface
            if let rootVC = self.view.window?.rootViewController as? ViewController {
                /// Send the visual state via the Alan AI agentic interface
                rootVC.setVisualState(state: ["screen": "first"])
            }
        }
    }

In the same way, create one more file and name it

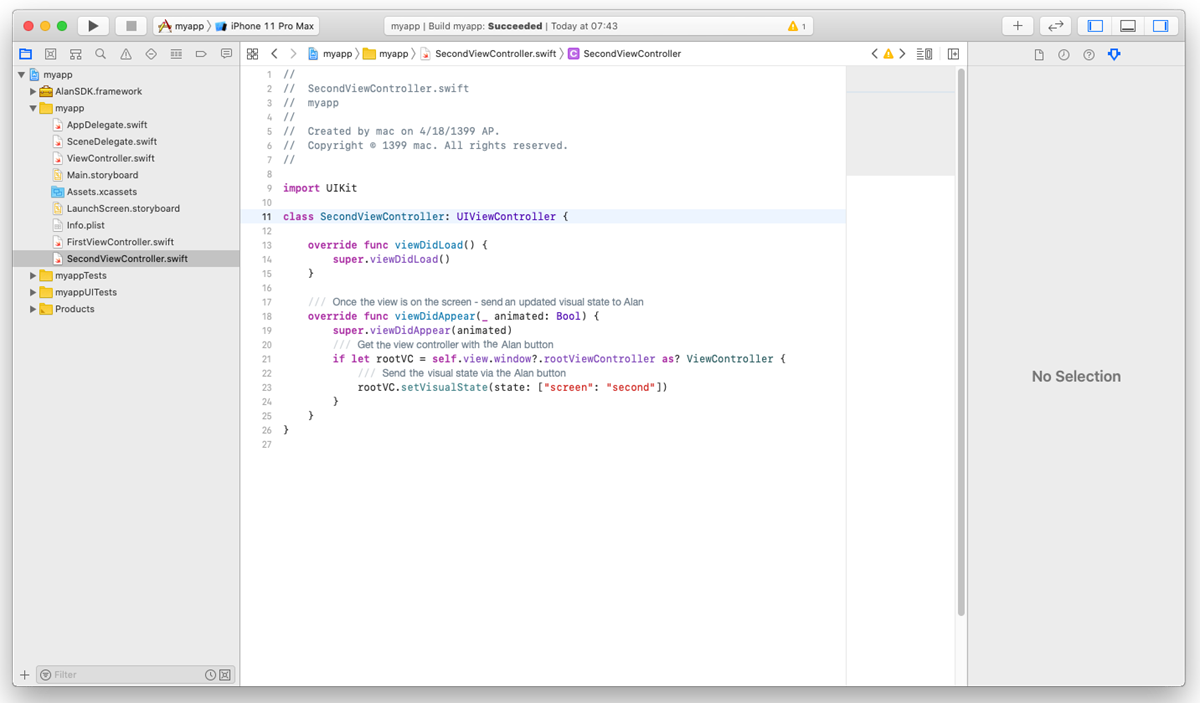

SecondViewController. The content of the file should be the following:SecondViewController.swift¶import UIKit class SecondViewController: UIViewController { override func viewDidLoad() { super.viewDidLoad() } /// Once the view is on the screen - send an updated visual state to Alan AI override func viewDidAppear(_ animated: Bool) { super.viewDidAppear(animated) /// Get the view controller with the Alan AI agentic interface if let rootVC = self.view.window?.rootViewController as? ViewController { /// Send the visual state via the Alan AI agentic interface rootVC.setVisualState(state: ["screen": "second"]) } } }

Here is what our project should look like:

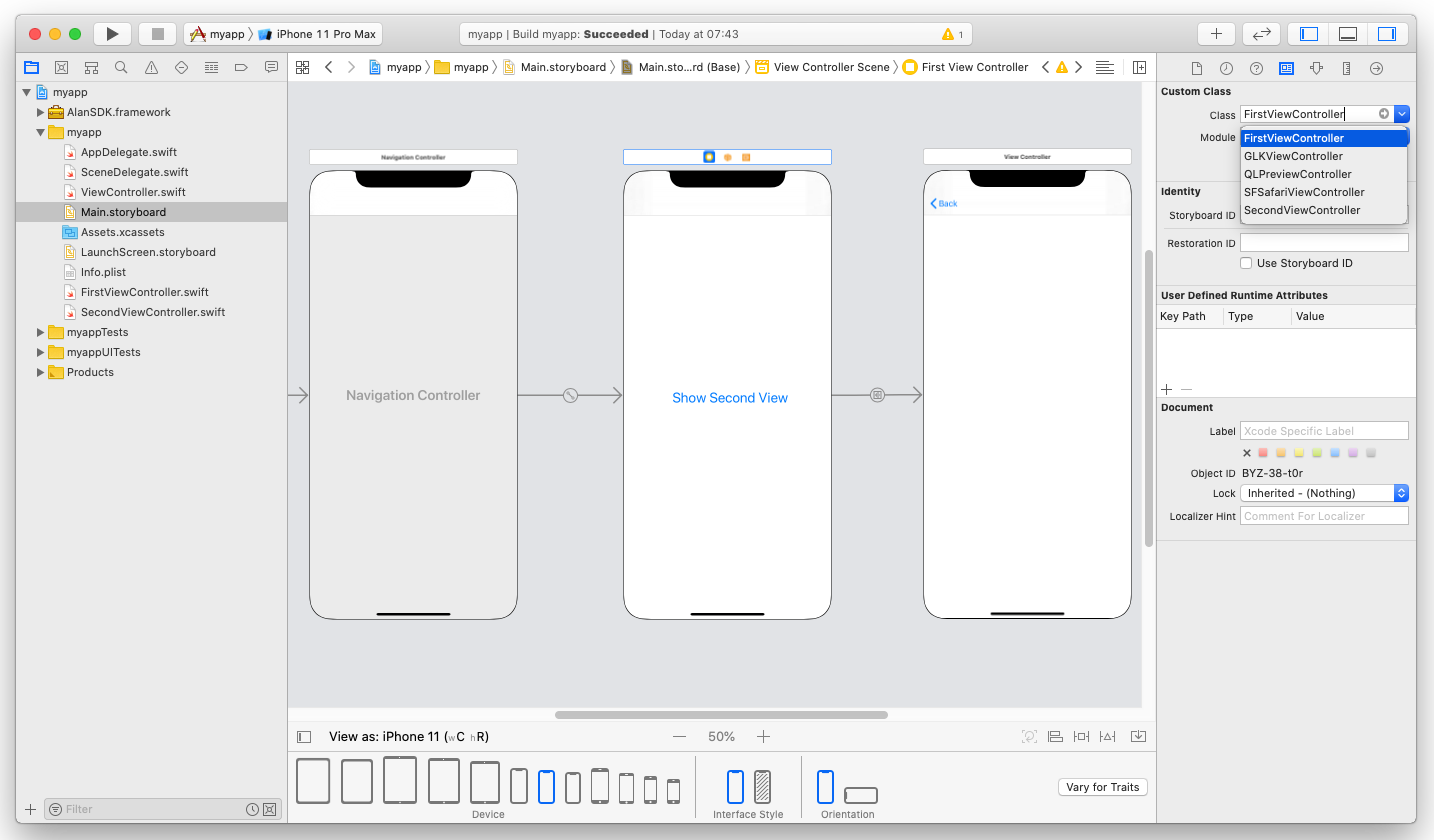

Open the app’s storyboard: Main.storyboard.

In the storyboard, select the first View Controller.

In the right pane, click the Identity Inspector icon.

In the Custom Class section, from the Class list, select FirstViewController.

Repeat the previous three steps (select the controller, open the Identity Inspector and choose the class) for the second View Controller to connect it to the SecondViewController class.

In the procedure above, we created two classes, FirstViewController and SecondViewController, and connected them to the first View Controller and second View Controller, respectively. Here is how our app works now: when the first View Controller appears on the device screen, the setVisualState() function is invoked and the ["screen": "first"] dictionary is passed to the dialog script as a JSON object. Similarly, when the second View Controller appears on the device screen, the ["screen": "second"] dictionary is passed to the dialog script.

Step 2: Add a voice command with different answers for views

Suppose we want the user to be able to ask: What view is this? The Agentic Interface must reply differently depending on which view is open. Let’s go back to Alan AI Studio and add a new intent:

    intent(`What view is this?`, p => {
        let screen = p.visual.screen;
        switch (screen) {
            case "first":
                p.play(`This is the initial view`);
                break;
            case "second":
                p.play(`This is the second view`);
                break;
            default:
                p.play(`This is an example iOS app by Alan`);
        }
    });

Here we use the p.visual.screen variable to access data passed with the visual state. Depending on the variable value, the Agentic Interface plays back different responses.
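One way to check this reply logic outside Alan AI Studio is to move the switch into a plain function and call it with a stand-in for p. The sketch below does that; replyForScreen, makeFakeP and whatViewHandler are hypothetical names introduced for illustration, and the fake p object only mimics the visual and play members used in this tutorial.

```javascript
// Hypothetical pure helper: maps the screen value from the visual state to a reply.
function replyForScreen(screen) {
    switch (screen) {
        case "first":
            return "This is the initial view";
        case "second":
            return "This is the second view";
        default:
            return "This is an example iOS app by Alan";
    }
}

// Stand-in for the handler argument: records replies instead of speaking them.
function makeFakeP(visual) {
    const played = [];
    return { visual, played, play: (msg) => played.push(msg) };
}

// The handler body as it could look inside intent(`What view is this?`, ...).
// It tolerates a missing visual state by falling through to the default reply.
function whatViewHandler(p) {
    const screen = p.visual ? p.visual.screen : undefined;
    p.play(replyForScreen(screen));
}

const fakeP = makeFakeP({ screen: "second" });
whatViewHandler(fakeP);
console.log(fakeP.played[0]); // This is the second view
```

In a real dialog script, the same whatViewHandler function could be passed as the second argument of intent(), which keeps the reply logic testable on its own.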

You can test it: run the app on the simulator and ask: What view is this? The Agentic Interface will reply: This is the initial view. Then say: Navigate forward and ask: What view is this? The Agentic Interface will answer: This is the second view.

Step 3: Create a view-specific command

If an app has several views, it may be necessary to create voice commands that will work for specific views only. In Alan AI, you can create view-specific commands with the help of filters added to intents. The filter in an intent defines conditions in which the intent can be matched. In our case, we can use information about the open view as the filter.

Let’s modify the intent for getting back to the initial view. This command must work only if the second view is open; we cannot say Go back when at the initial view. In Alan AI Studio, update this intent to the following:

    const vScreen = visual({"screen": "second"});

    intent(vScreen, "Go back", p => {
        p.play({command: 'navigation', route: 'back'});
        p.play(`Going back`);
    });

The filter comes as the first parameter in the intent. Now this intent will be matched only if the second view is open and the visual state is {"screen": "second"}.
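For comparison, a similar restriction could be approximated without a filter by checking the visual state inside the handler. The sketch below is an assumption-laden illustration, not the tutorial's method: goBackHandler, makeFakeP and the refusal message are hypothetical, and the fake p object only records what would be passed to p.play().

```javascript
// Stand-in for the handler argument: records what would be sent to p.play().
function makeFakeP(visual) {
    const played = [];
    return { visual, played, play: (item) => played.push(item) };
}

// Hypothetical unfiltered variant of the "Go back" handler with a manual check.
function goBackHandler(p) {
    const screen = p.visual ? p.visual.screen : undefined;
    if (screen !== "second") {
        // Hypothetical reply for views that have nothing to go back to
        p.play("There is nowhere to go back to from this view");
        return;
    }
    p.play({ command: "navigation", route: "back" });
    p.play("Going back");
}

const onSecond = makeFakeP({ screen: "second" });
goBackHandler(onSecond);
console.log(onSecond.played.length); // 2

const onFirst = makeFakeP({ screen: "first" });
goBackHandler(onFirst);
console.log(onFirst.played.length); // 1
```

The two approaches behave differently: with the visual() filter, the intent is not matched at all on the initial view, whereas with a manual check the intent is matched and the handler decides how to respond.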

You can test it: run the app on the simulator, tap the Alan AI agentic interface and say: Navigate forward. Then say: Go back. The initial view will be displayed. When at the initial view, try saying this command again. Alan AI will not be able to match this intent.

What you finally get

After completing this tutorial, you will have an iOS app with two views that responds to view-specific voice commands. To make sure you have set up your app correctly, you can compare it with the example app from the Alan AI GitHub:

SwiftTutorial.part3.zip: Xcode project of the app

SwiftTutorial.part3.js: voice commands used for this tutorial

What’s next?

Have a look at the next tutorial: Highlighting items with voice.