Building a voice agent for an iOS Swift app

With the Alan AI SDK for iOS, you can create a voice agent or chatbot like Siri and embed it in your iOS Swift app. The Alan AI Platform provides all the tools you need and leverages the industry’s best Automatic Speech Recognition (ASR), Spoken Language Understanding (SLU) and Speech Synthesis technologies to quickly build an AI agent from scratch.

In this tutorial, we will create a single view iOS app with an Alan AI voice agent and test-drive it on a simulator. App users will be able to tap the voice agent button and give custom voice commands, and Alan AI will reply to them.

What you will learn

How to add a voice interface to an iOS Swift app

How to write simple voice commands for an iOS Swift app

What you will need

To go through this tutorial, make sure the following prerequisites are met:

You have signed up for Alan AI Studio.

You have created a project in Alan AI Studio.

You have set up Xcode on your computer.

Step 1: Create a single view iOS app

For this tutorial, we will be using a simple iOS app with a single view. To create the app:

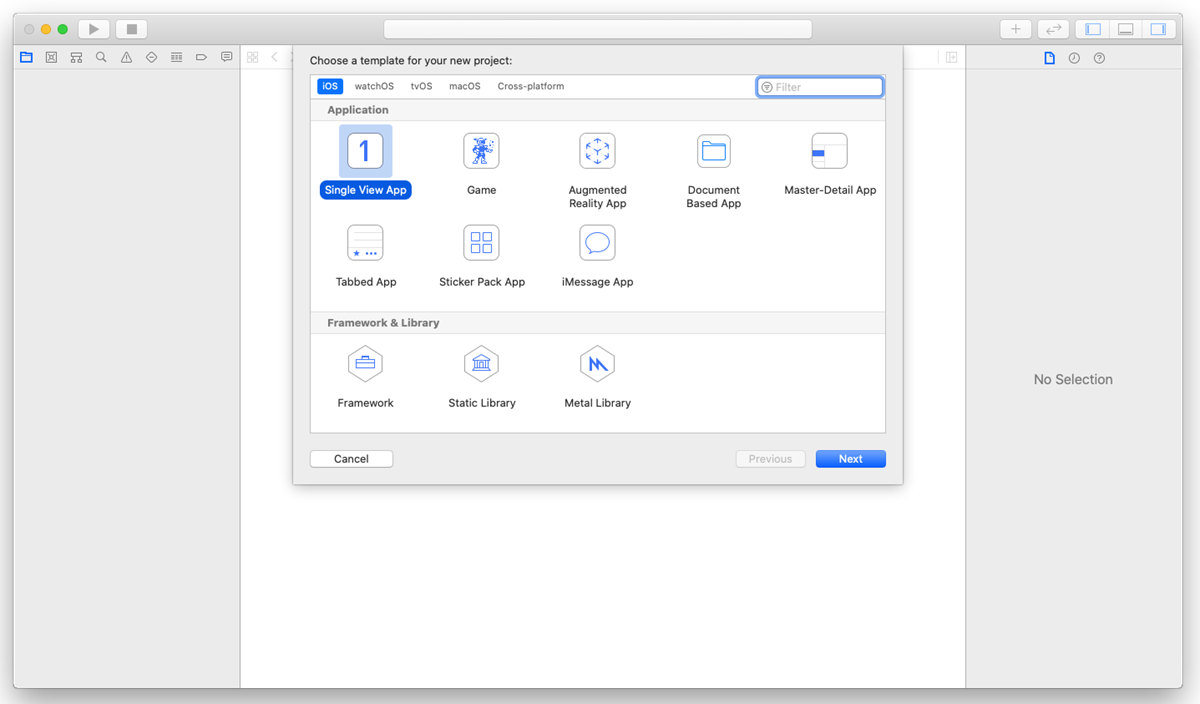

Open Xcode and create a new Xcode project.

Select the Single View App template. In Xcode 12 and later, this template is called App.

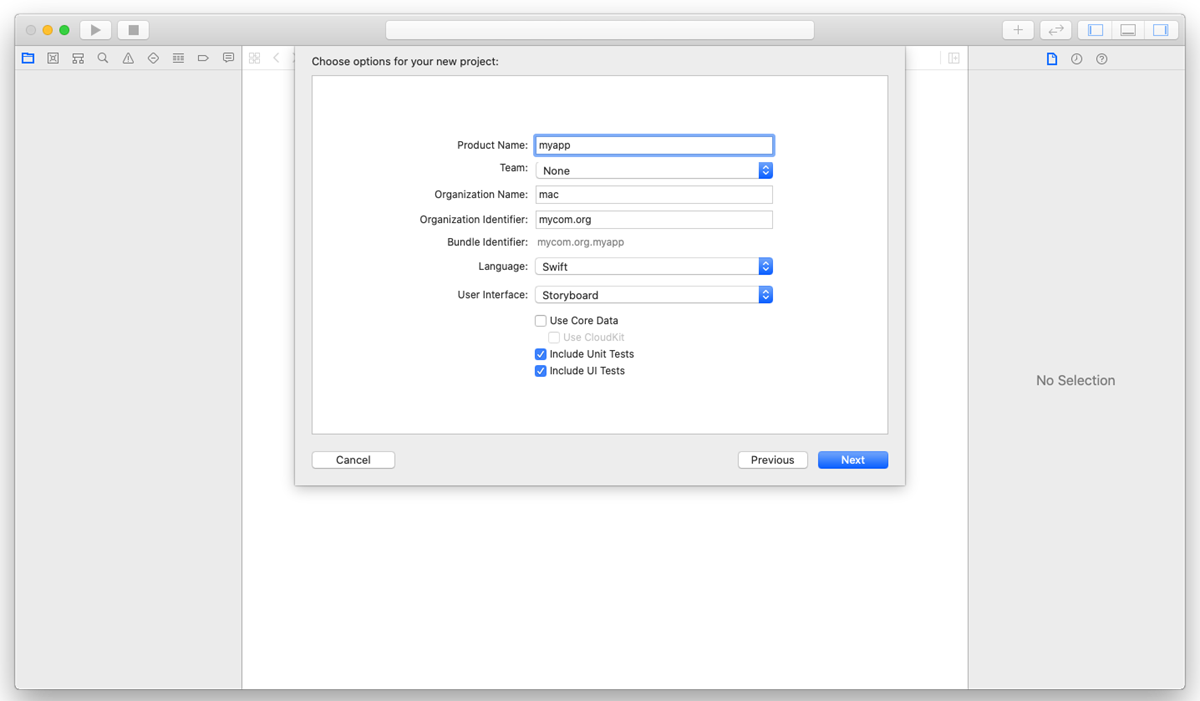

In the Product Name field, specify the project name.

From the Language list, select Swift.

From the User Interface list, select Storyboard.

Select a folder in which the project will reside and click Create.

Step 2: Add the Alan AI SDK for iOS to the project

The next step is to add the Alan AI SDK for iOS to the app project. There are two ways to do it:

with CocoaPods

manually

Let’s add the SDK manually:

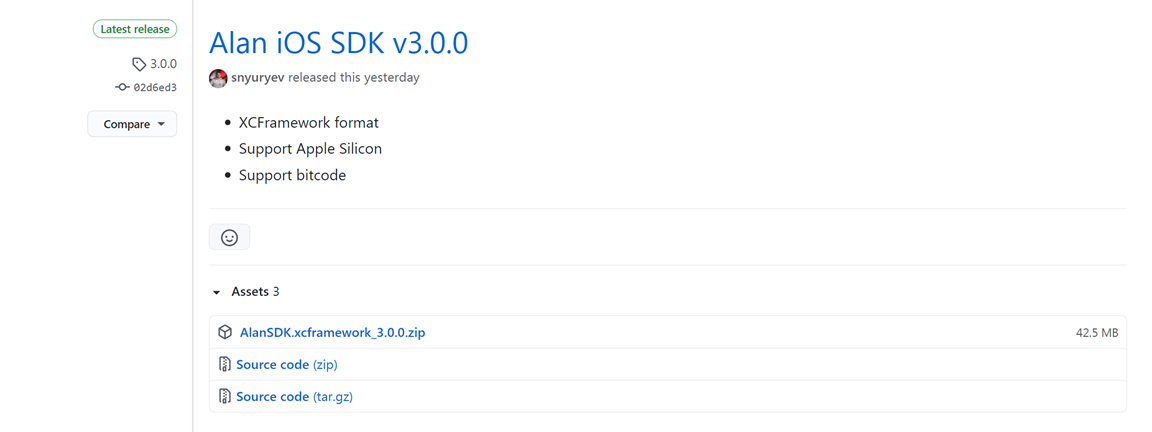

On Alan AI GitHub, go to the Releases page for the Alan AI SDK for iOS: https://github.com/alan-ai/alan-sdk-ios/releases.

Download the

AlanSDK.xcframework_<x.x.x>.zipfile from the latest release.

On the computer, extract

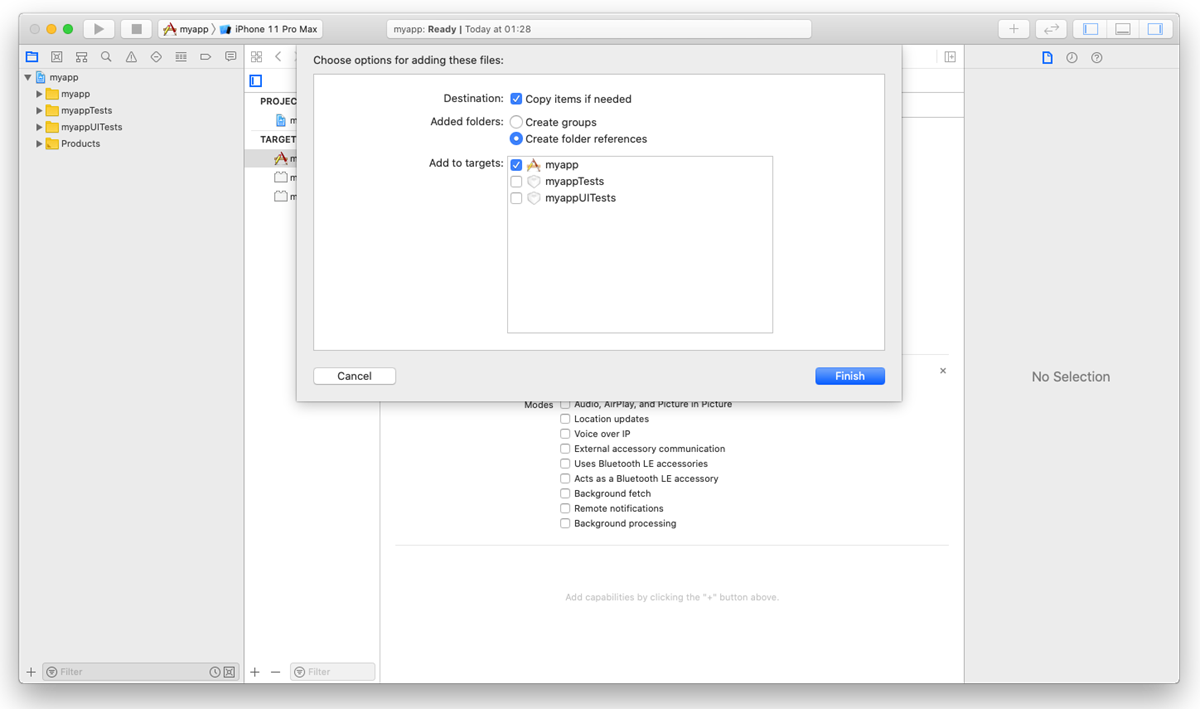

AlanSDK.xcframeworkfrom the ZIP archive.Drag

AlanSDK.xcframeworkand drop it under the root node of the Xcode projectIn the displayed window, select the Copy items if needed check box and click Finish.

Step 3: Specify the Xcode project settings

Now we need to adjust the project settings to use the Alan AI SDK for iOS.

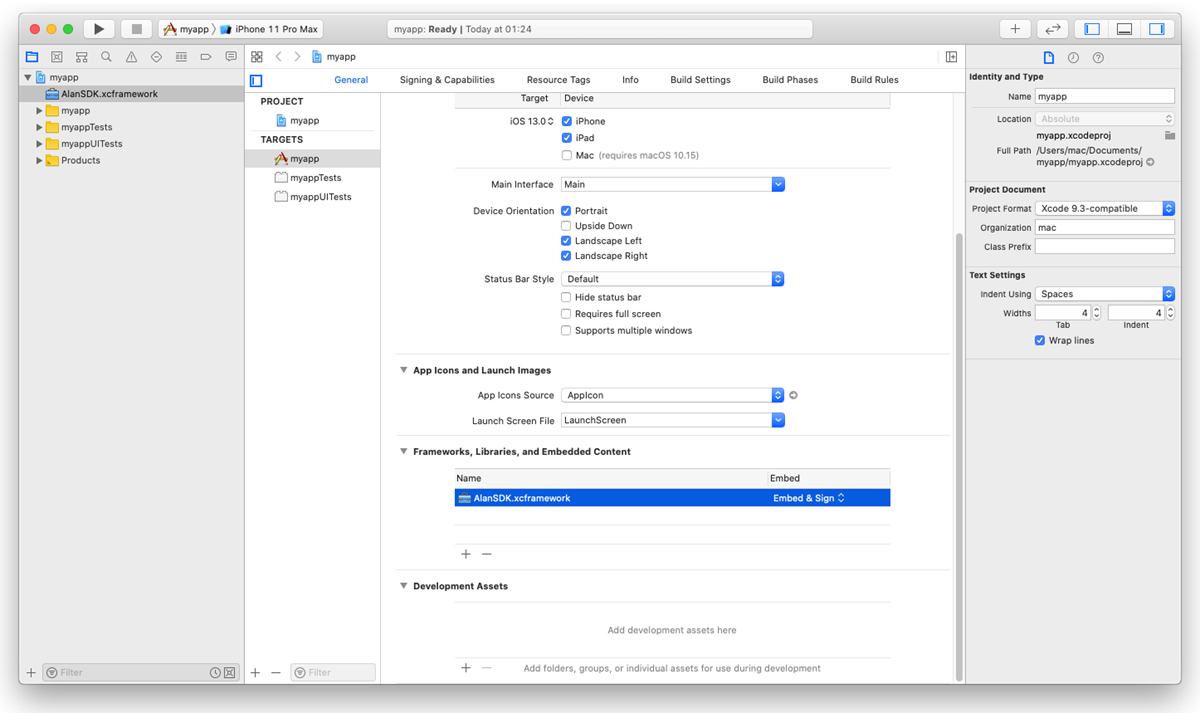

We need to make sure the Alan AI SDK for iOS is embedded when the app is built. On the General tab of the app target, scroll down to the Frameworks, Libraries, and Embedded Content section. To the right of AlanSDK.xcframework, select Embed & Sign.

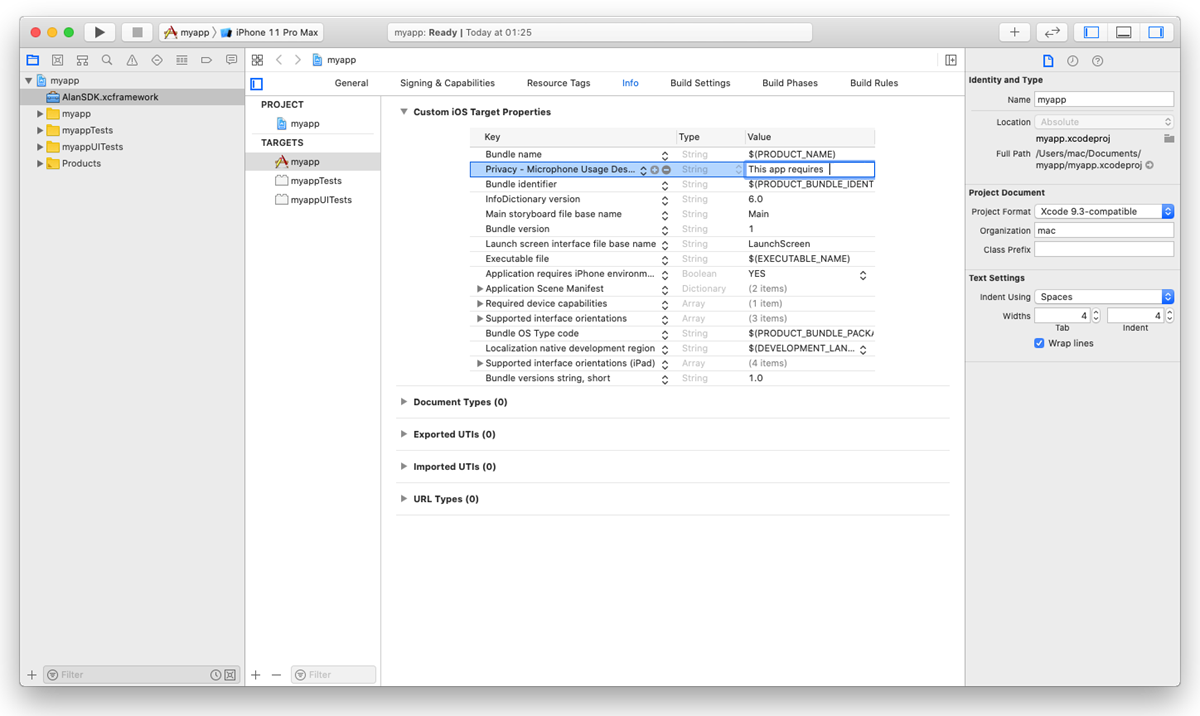

In iOS, the user must explicitly grant an app permission to access the microphone. In the Xcode project, we need to add a key whose value explains to the user why the app requires access to the microphone.

Go to the Info tab.

In the Custom iOS Target Properties section, hover over any key in the list and click the plus icon to the right.

From the list, select Privacy - Microphone Usage Description.

In the Value field to the right, provide a description for the added key. iOS shows this description in the alert that asks the user for microphone access the first time the app requests it.
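If the Privacy - Microphone Usage Description key is missing, iOS terminates the app as soon as it tries to access the microphone. The Swift sketch below shows one way to check for the key programmatically, for example in a debug build or a unit test. It relies only on the fact that Xcode stores this entry in Info.plist under the raw key NSMicrophoneUsageDescription. The function microphoneUsageDescription(in:) is a hypothetical helper and is not part of the Alan AI SDK.

```swift
import Foundation

/// Raw Info.plist key behind the "Privacy - Microphone Usage Description" entry in Xcode.
let microphoneUsageKey = "NSMicrophoneUsageDescription"

/// Hypothetical helper: returns the microphone usage description from an
/// Info.plist-style dictionary, or nil if the key is missing, not a string, or blank.
func microphoneUsageDescription(in info: [String: Any]) -> String? {
    guard let text = info[microphoneUsageKey] as? String,
          !text.trimmingCharacters(in: .whitespacesAndNewlines).isEmpty else {
        return nil
    }
    return text
}

// Example with a sample dictionary shaped like Info.plist.
let sampleInfo: [String: Any] = [
    microphoneUsageKey: "Alan AI needs the microphone to hear your voice commands."
]
print(microphoneUsageDescription(in: sampleInfo) ?? "NSMicrophoneUsageDescription is missing")
```

In the running app, you would pass Bundle.main.infoDictionary ?? [:] in place of the sample dictionary.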

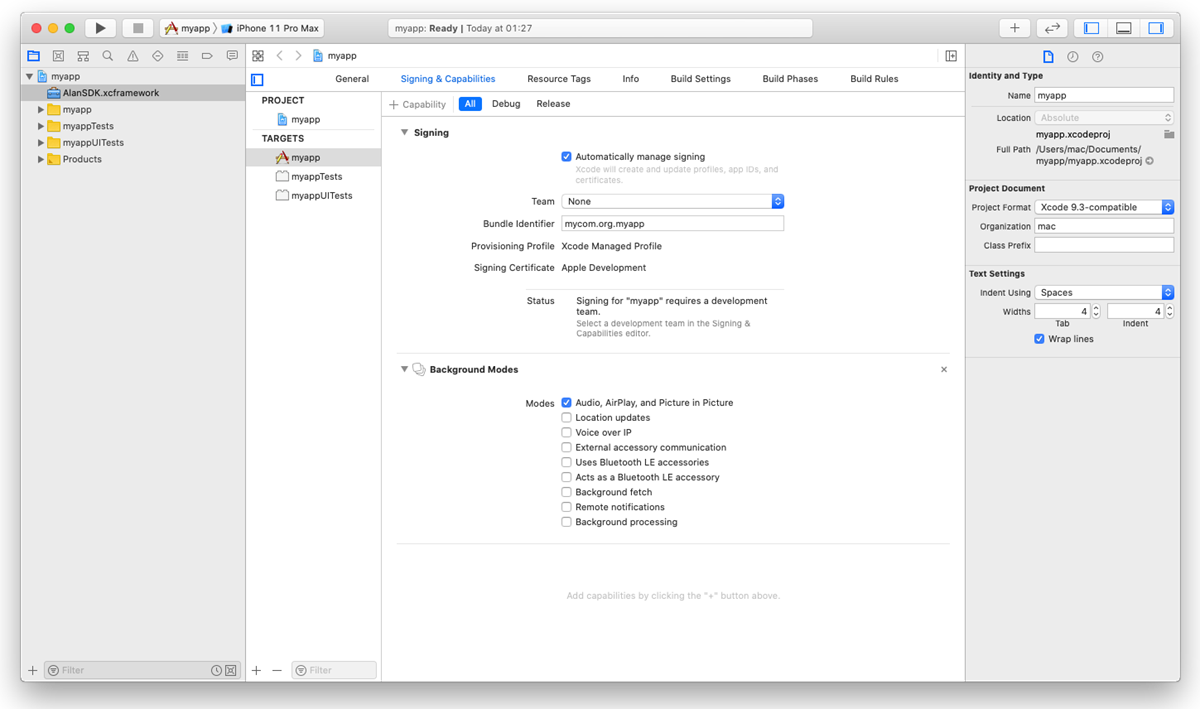

We also need to enable background audio for the app. Go to the Signing & Capabilities tab. In the top left corner, click + Capability and, in the capabilities list, double-click Background Modes. In the Modes list, select the Audio, AirPlay, and Picture in Picture check box.
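Selecting that check box adds the value audio to the UIBackgroundModes array in the app's Info.plist. The sketch below checks for that value. It is written against a plain dictionary so it can run outside an app. The function name hasAudioBackgroundMode(in:) is a hypothetical helper.

```swift
import Foundation

/// Hypothetical helper: returns true if an Info.plist-style dictionary lists
/// "audio" under UIBackgroundModes, which is the value that the
/// "Audio, AirPlay, and Picture in Picture" check box adds.
func hasAudioBackgroundMode(in info: [String: Any]) -> Bool {
    guard let modes = info["UIBackgroundModes"] as? [String] else {
        return false
    }
    return modes.contains("audio")
}

// Example with a sample dictionary; in the app, pass Bundle.main.infoDictionary ?? [:].
let sampleModes: [String: Any] = ["UIBackgroundModes": ["audio"]]
print(hasAudioBackgroundMode(in: sampleModes)) // prints "true"
```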

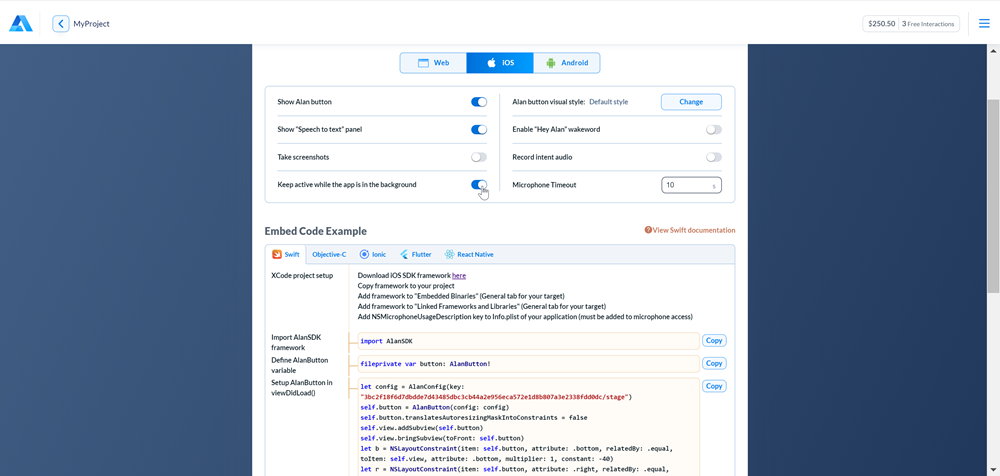

We also need to make sure the background mode is enabled in our Alan AI project. In Alan AI Studio, at the top of the code editor, click Integrations, go to the iOS tab, and enable the Keep active while the app is in the background option.

Step 4: Integrate Alan AI with Swift

The next step is to update our app to import the Alan AI SDK for iOS and add the Alan AI button to it.

In the app folder, open the ViewController.swift file.

At the top of the file, import the Alan AI SDK for iOS:

ViewController.swift

```swift
import AlanSDK
```

In the ViewController class, define variables for the Alan AI button and Alan AI text panel:

ViewController.swift

```swift
class ViewController: UINavigationController {
    /// Alan AI button
    fileprivate var button: AlanButton!

    /// Alan AI text panel
    fileprivate var text: AlanText!
}
```

In the ViewController class, add the setupAlan() function. Here we set up the Alan AI button and Alan AI text panel and position them on the view:

ViewController.swift

```swift
class ViewController: UINavigationController {
    fileprivate func setupAlan() {
        /// Define the project key
        let config = AlanConfig(key: "")

        /// Init the Alan AI button
        self.button = AlanButton(config: config)

        /// Init the Alan AI text panel
        self.text = AlanText(frame: CGRect.zero)

        /// Add the button and text panel to the view
        self.view.addSubview(self.button)
        self.button.translatesAutoresizingMaskIntoConstraints = false
        self.view.addSubview(self.text)
        self.text.translatesAutoresizingMaskIntoConstraints = false

        /// Align the button and text panel on the view:
        /// the 64 x 64 pt button sits 40 pt above the bottom edge and 20 pt from the trailing edge;
        /// the 64 pt tall text panel sits 40 pt above the bottom edge with 20 pt side margins
        let views = ["button" : self.button!, "text" : self.text!]
        let verticalButton = NSLayoutConstraint.constraints(withVisualFormat: "V:|-(>=0@299)-[button(64)]-40-|", options: NSLayoutConstraint.FormatOptions(), metrics: nil, views: views)
        let verticalText = NSLayoutConstraint.constraints(withVisualFormat: "V:|-(>=0@299)-[text(64)]-40-|", options: NSLayoutConstraint.FormatOptions(), metrics: nil, views: views)
        let horizontalButton = NSLayoutConstraint.constraints(withVisualFormat: "H:|-(>=0@299)-[button(64)]-20-|", options: NSLayoutConstraint.FormatOptions(), metrics: nil, views: views)
        let horizontalText = NSLayoutConstraint.constraints(withVisualFormat: "H:|-20-[text]-20-|", options: NSLayoutConstraint.FormatOptions(), metrics: nil, views: views)
        self.view.addConstraints(verticalButton + verticalText + horizontalButton + horizontalText)
    }
}
```

Now, in let config = AlanConfig(key: ""), define the Alan AI SDK key for the Alan AI Studio project. To get the key, in Alan AI Studio, at the top of the code editor, click Integrations and copy the value from the Alan SDK Key field. Then paste the key into the Xcode project.

In viewDidLoad(), call the setupAlan() function:

ViewController.swift

```swift
class ViewController: UINavigationController {
    override func viewDidLoad() {
        /// Let UIKit finish its own setup first
        super.viewDidLoad()
        /// Add and position the Alan AI button and text panel
        self.setupAlan()
    }
}
```
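Pasting the key straight into AlanConfig(key:) is the simplest option for this tutorial. If you would rather keep the key out of ViewController.swift, one possible approach is to store it in Info.plist under a custom key and read it at startup. The key still ships inside the app bundle, so this approach organizes configuration but does not hide the key. In the sketch below, the Info.plist key name AlanSDKKey and the helper alanSDKKey(from:) are assumptions made for illustration. The Alan AI SDK does not read this key on its own.

```swift
import Foundation

/// Hypothetical custom Info.plist key that holds the Alan AI SDK key.
/// The Alan AI SDK does not look this key up itself; the app reads it and passes it on.
let alanKeyInfoPlistKey = "AlanSDKKey"

/// Error thrown when the SDK key is absent or blank.
enum AlanKeyError: Error {
    case missing
}

/// Hypothetical helper: reads the SDK key from an Info.plist-style dictionary,
/// trims surrounding whitespace, and throws if the value is missing, not a string, or empty.
func alanSDKKey(from info: [String: Any]) throws -> String {
    guard let raw = info[alanKeyInfoPlistKey] as? String else {
        throw AlanKeyError.missing
    }
    let key = raw.trimmingCharacters(in: .whitespacesAndNewlines)
    guard !key.isEmpty else {
        throw AlanKeyError.missing
    }
    return key
}

// Example with a sample dictionary; in the app, use Bundle.main.infoDictionary ?? [:].
let sampleConfig: [String: Any] = [alanKeyInfoPlistKey: "  your-alan-sdk-key  "]
do {
    print(try alanSDKKey(from: sampleConfig)) // prints "your-alan-sdk-key"
} catch {
    print("Alan AI SDK key is missing from Info.plist")
}
```

In setupAlan(), you could then pass the returned value to AlanConfig(key:) and handle the thrown error. For example, you could call assertionFailure with a descriptive message so that a missing key is caught early in debug builds.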

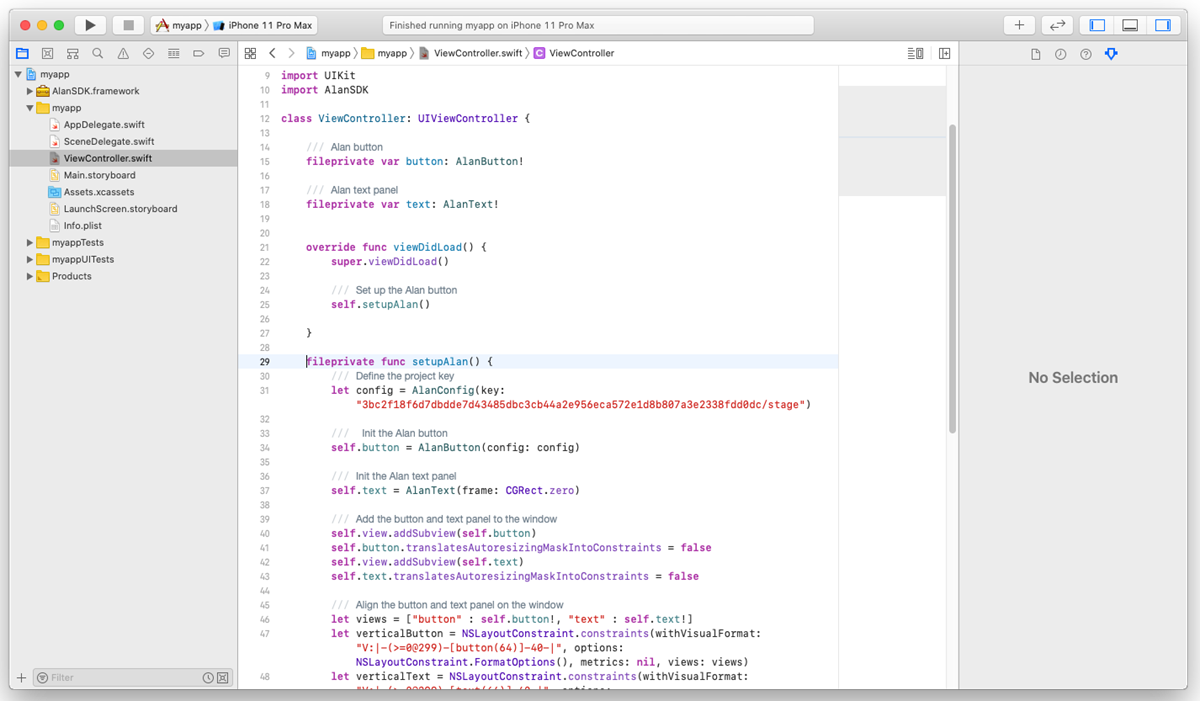

Here is what our Xcode project should look like:

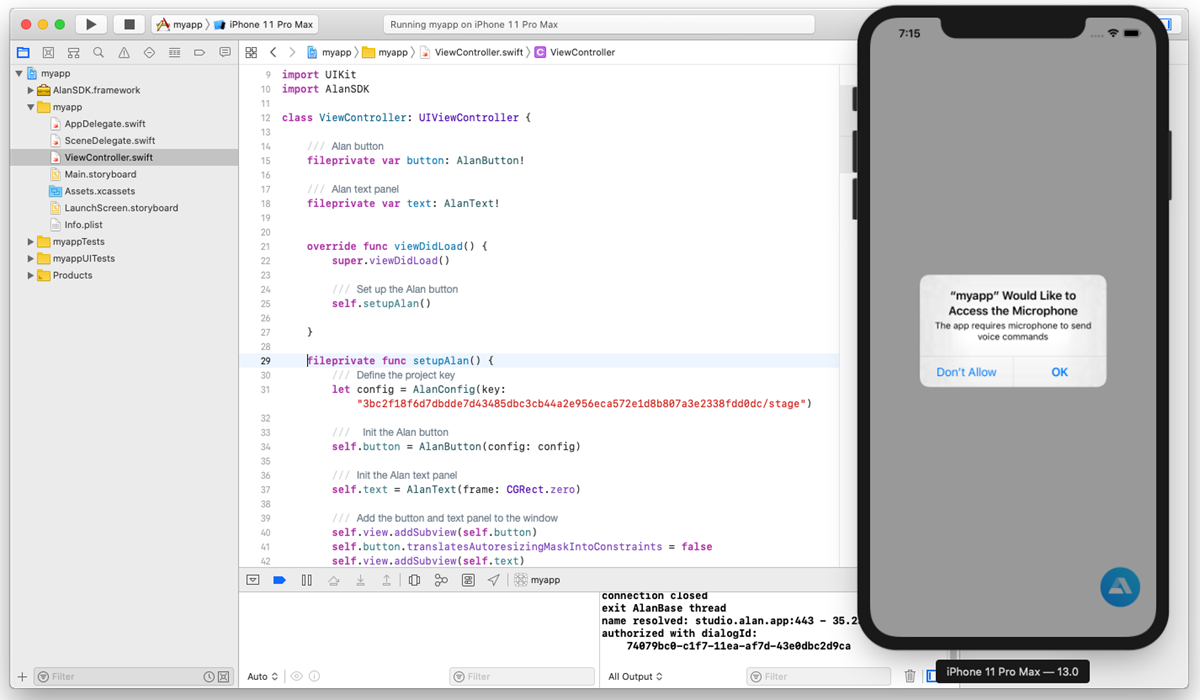

Run the app. Here is our app running on the simulator. The app displays an alert to get microphone access with the description we have provided:

Step 5: Add voice commands

Let’s add some voice commands so that we can interact with Alan AI. In Alan AI Studio, open the project and in the code editor, add the following intents:

```javascript
intent(`What is your name?`, p => {
    p.play(`It's Alan, and yours?`);
});

intent(`How are you doing?`, p => {
    p.play(`Good, thank you. What about you?`);
});
```

In the app, tap the Alan AI button and ask: "What is your name?" and "How are you doing?" The AI agent will reply with the responses defined in the intents.

What you finally get

After you complete this tutorial, you will have a single view iOS app integrated with Alan AI. To make sure you have set up your app correctly, you can compare it with the example app available on Alan AI GitHub:

SwiftTutorial.part1.zip: Xcode project of the app

SwiftTutorial.part1.js: voice commands used for this tutorial

What’s next?

You can now proceed to building a voice interface with Alan AI. Here are some helpful resources:

Have a look at the next tutorial: Navigating between views in an iOS Swift app.

Go to Dialog script concepts to learn about Alan AI concepts and functionality you can use to create a dialog script.

On Alan AI GitHub, get the iOS Swift example app. Use this example app to see how integration for iOS apps can be implemented.