Alan AI React Native Framework

Available on: Android, iOS

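Integrating with Alan AI

To integrate a React Native app with Alan AI, you need to do the following:

Step 1. Set up the environment

Before you start integrating a React Native app with Alan AI, make sure your React Native development environment is set up as described in Setting up the development environment in the React Native documentation. The steps in this guide also use npm, the React Native CLI and, for iOS, Xcode and CocoaPods.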

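Step 2. Install the Alan AI React Native plugin

To add the Alan AI React Native plugin to your app, in the Terminal, navigate to the app folder and install the @alan-ai/alan-sdk-react-native package:

npm install @alan-ai/alan-sdk-react-native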

Step 3. Add the Alan AI agentic interface to the app

Once the plugin is installed, you need to add the Alan AI agentic interface to your React Native app.

Add the Alan AI agentic interface and Alan AI text panel to the app. In the app folder, open App.js and add the following import statement at the top of the file:

App.js
```javascript
import { AlanView } from '@alan-ai/alan-sdk-react-native';
```

In the App.js file, add a view with the Alan AI agentic interface and Alan AI text panel:

App.js
```javascript
return (
  <AlanView projectid={''} host={'v1.alan.app'} />
);
```

In projectid, specify the Alan AI SDK key for your Alan AI Studio project. To get the key, in Alan AI Studio, at the top of the code editor, click Integrations and copy the value from the Alan SDK Key field.

App.js
```javascript
return (
  <AlanView
    projectid={'cc2b0aa23e5f90d2974f1bf6b6929c1b2e956eca572e1d8b807a3e2338fdd0dc/prod'}
    host={'v1.alan.app'}
  />
);
```

You need to add a listener for events coming from the dialog script. To start listening for events, add the following import statement to App.js:

App.js
```javascript
import { NativeEventEmitter, NativeModules } from 'react-native';
```

In App.js, create a new NativeEventEmitter object:

App.js
```javascript
const { AlanManager, AlanEventEmitter } = NativeModules;
const alanEventEmitter = new NativeEventEmitter(AlanEventEmitter);
```

And subscribe to the dialog script events:

App.js
```javascript
const subscription = alanEventEmitter.addListener('command', (data) => {
  console.log(`got command event ${JSON.stringify(data)}`);
});
```

Do not forget to remove the listener when your App class component unmounts:

App.js
```javascript
componentWillUnmount() {
  subscription.remove();
}
```
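If App.js subscribes to more than one event, it can be simpler to keep all subscriptions together and remove them in one call. The following is a minimal sketch, not part of the Alan AI SDK: subscribeToAlanEvents is a hypothetical helper, and it assumes only that addListener(eventName, handler) returns a subscription object with a remove() method, which is how React Native's NativeEventEmitter behaves. Because the emitter is passed in as a parameter, the helper can also be tried with a stub outside React Native.

```javascript
// Hypothetical helper (not part of the Alan AI SDK): subscribes one handler
// per event name and returns a single function that removes them all.
// Assumes emitter.addListener(name, handler) returns an object with remove(),
// as React Native's NativeEventEmitter does.
function subscribeToAlanEvents(emitter, handlers) {
  const subscriptions = Object.entries(handlers).map(([eventName, handler]) =>
    emitter.addListener(eventName, handler)
  );
  // Call the returned function when the component unmounts.
  return function unsubscribeAll() {
    subscriptions.forEach((subscription) => subscription.remove());
  };
}
```

For example, componentDidMount() could store this.unsubscribe = subscribeToAlanEvents(alanEventEmitter, { command: (data) => console.log(data) }), and componentWillUnmount() could call this.unsubscribe(). In a function component, the same function can be returned as the cleanup of a useEffect callback.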

Note

Regularly update the Alan AI package your project depends on. To check if a newer version is available, run npm outdated. To update the package, run npm update @alan-ai/alan-sdk-react-native. For more details, see the npm documentation.

Running the app

After you have integrated your app with Alan AI, you can build and deploy your project as a native iOS or Android app.

Running on iOS

To run your app integrated with Alan AI on the iOS platform, you need to update the app settings for iOS.

In the Terminal, navigate to the ios folder in your app:

Terminal
```shell
cd ios
```

In the ios folder, open the Podfile and change the minimum iOS version to 11:

Podfile
```ruby
platform :ios, '11.0'
```

In the Terminal, run the following command to install dependencies for the project:

Terminal
```shell
pod install
```

In the ios folder, open the generated Xcode workspace file: <appname>.xcworkspace. From now on, use this file to open your Xcode project.

In iOS, the user must explicitly grant permission for an app to access the user’s data and resources. An app with the Alan AI agentic interface requires access to:

- The user’s device microphone, for voice interactions
- The user’s device camera, for testing Alan AI projects on mobile

To comply with this requirement, you must add the NSMicrophoneUsageDescription and NSCameraUsageDescription keys to the Info.plist file of your app and provide a message explaining why your app requires access to the microphone and camera. The message will be displayed only when Alan AI needs to activate the microphone or camera. To add the keys:

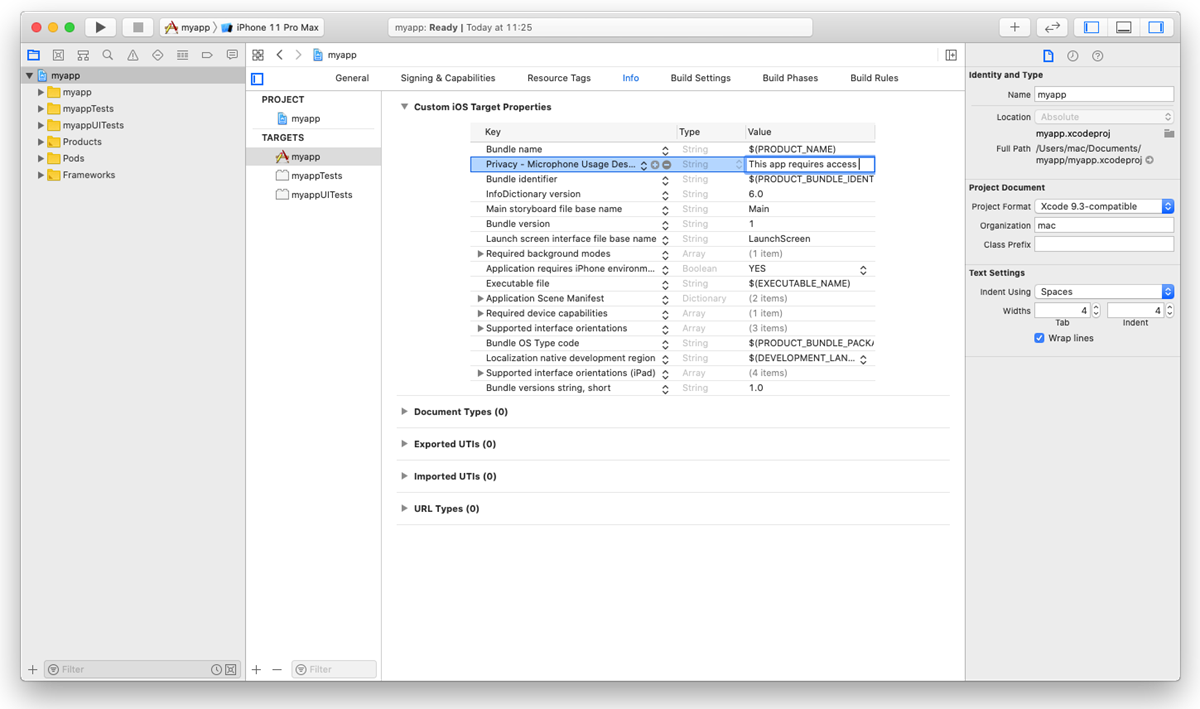

1. In Xcode, go to the Info tab.
2. In the Custom iOS Target Properties section, hover over any key in the list and click the plus icon to the right.
3. From the list, select Privacy - Microphone Usage Description.
4. In the Value field to the right, provide a description for the added key. iOS displays this description to the user in the system prompt that asks for access.
5. Repeat the steps above to add the Privacy - Camera Usage Description key.

In the Terminal, go back up one level to the app folder:

Terminal
```shell
cd ..
```

To run your app on the iOS platform, use one of the following commands:

Terminal
```shell
react-native run-ios
# or
yarn ios
```

You can also open the <appname>.xcworkspace file in Xcode and run the app on a simulator or device.

Running on Android

To run your React Native app integrated with Alan AI on the Android platform:

Make sure the minimum SDK version for your app is set to 21 or higher (minSdkVersion 21). To check the version, open the android/app/build.gradle file, under defaultConfig, locate minSdkVersion and update its value if necessary.

To run your app on the Android platform, use one of the following commands:

Terminal
```shell
react-native run-android
# or
yarn android
```

You can also launch the app from Android Studio: open <app>/android in the IDE and run the app in the usual way.

Running in the Debug mode

You can run your app on iOS or Android in debug mode. Debug mode allows you to hot reload the app on the device or simulator as soon as you change anything in the app.

To run the app in debug mode, make sure the Metro bundler is running. To start the Metro bundler, run the following command in the Terminal:

Terminal
```shell
react-native start
```

The Metro bundler starts in this Terminal window. In another Terminal window, you can then run your app as usual with the following commands:

For iOS:

Terminal
```shell
react-native run-ios
# or
yarn ios
```

For Android:

Terminal
```shell
react-native run-android
# or
yarn android
```

Note

After integration, you may get a warning related to the onButtonState event. You can safely ignore it: the warning disappears as soon as you add the onCommand handler to your app.

Specifying the Alan AI agentic interface parameters

You can specify the following parameters for the Alan AI agentic interface added to your app:

| Name | Type | Description |
|---|---|---|
| projectid | string | The Alan AI SDK key for a project in Alan AI Studio. |
| authData | JSON object | The authentication or configuration data to be sent to the dialog script. For details, see authData. |

Using client API methods

You can use the following client API methods in your app:

setVisualState()

Use the setVisualState() method to inform the Alan AI agentic interface about the app’s visual context. For details, see setVisualState().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

// A method of your App class component
setVisualState() {
  // Pass any JSON object as the visual state
  AlanManager.setVisualState({"data":"your data"});
}
```
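You might call setVisualState() each time the app's visual context changes, for example when the user opens another screen. If the same state can be sent repeatedly (for example, on re-renders), a small wrapper can skip duplicates. This is a minimal sketch under that assumption: createVisualStateUpdater and the screen field in the usage example are hypothetical names, not part of the Alan AI SDK, and the AlanManager module is passed in as a parameter.

```javascript
// Hypothetical helper (not part of the Alan AI SDK): wraps
// AlanManager.setVisualState() and skips calls whose state is identical
// to the last one sent.
// Comparison uses JSON.stringify, so key order matters: { a: 1, b: 2 } and
// { b: 2, a: 1 } are treated as different states.
function createVisualStateUpdater(alanManager) {
  let lastSerialized = null;
  return function updateVisualState(state) {
    const serialized = JSON.stringify(state);
    if (serialized === lastSerialized) {
      return false; // Unchanged: nothing is sent
    }
    lastSerialized = serialized;
    alanManager.setVisualState(state);
    return true; // A new state was sent
  };
}
```

For example: const updateVisualState = createVisualStateUpdater(AlanManager); updateVisualState({ screen: 'cart' });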

callProjectApi()

Use the callProjectApi() method to send data from the client app to the dialog script and trigger activities without voice and text commands. For details, see callProjectApi().

Dialog script
```javascript
projectAPI.setClientData = function(p, param, callback) {
  console.log(param);
  // Return a result (or an error as the first argument) to the client app
  callback(null, 'data received');
};
```

App.js
```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

// A method of your App class component
callProjectApi() {
  // Pass any JSON object to the script function
  AlanManager.callProjectApi(
    'script::setClientData', {"data":"your data"},
    (error, result) => {
      if (error) {
        console.error(error);
      } else {
        console.log(result);
      }
    },
  );
}
```
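callProjectApi() reports its outcome through an (error, result) callback. If the rest of your app uses async/await, you can wrap the call in a Promise. The sketch below is a hypothetical helper, not part of the Alan AI SDK; it assumes the callback is called once, with a non-null error on failure, as the example above expects.

```javascript
// Hypothetical helper (not part of the Alan AI SDK): adapts the
// callback-style AlanManager.callProjectApi(method, data, callback)
// to a Promise, so it can be used with async/await.
function callProjectApiAsync(alanManager, method, data) {
  return new Promise((resolve, reject) => {
    alanManager.callProjectApi(method, data, (error, result) => {
      if (error) {
        reject(error); // The dialog script (or the SDK) reported an error
      } else {
        resolve(result); // The value passed to the script's callback
      }
    });
  });
}
```

For example, inside an async function: const result = await callProjectApiAsync(AlanManager, 'script::setClientData', { data: 'your data' });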

playText()

Use the playText() method to play specific text in the client app. For details, see playText().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

// A method of your App class component: plays any text message
playText() {
  // Pass the text as a string
  AlanManager.playText("Hi");
}
```

sendText()

Use the sendText() method to send a text message to Alan AI as the user’s input. For details, see sendText().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

// A method of your App class component: sends any text message
sendText() {
  // Pass the text as a string
  AlanManager.sendText("Hello Alan, can you help me?");
}
```

playCommand()

Use the playCommand() method to execute a specific command in the client app. For details, see playCommand().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

// A method of your App class component: executes a command locally
playCommand() {
  // Pass any JSON object as the command
  AlanManager.playCommand({"action":"openSomePage"});
}
```

activate()

Use the activate() method to activate the Alan AI agentic interface programmatically. For details, see activate().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

// A method of your App class component:
// activates the Alan AI agentic interface programmatically
activate() {
  AlanManager.activate();
}
```

deactivate()

Use the deactivate() method to deactivate the Alan AI agentic interface programmatically. For details, see deactivate().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

// A method of your App class component:
// deactivates the Alan AI agentic interface programmatically
deactivate() {
  AlanManager.deactivate();
}
```

isActive()

Use the isActive() method to check whether the Alan AI agentic interface is active. For details, see isActive().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

AlanManager.isActive((error, result) => {
  if (error) {
    console.error(error);
  } else {
    console.log(result);
  }
});
```
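The state methods above can be combined, for example to toggle the agentic interface from a button in your own UI. This is a minimal sketch, not part of the Alan AI SDK: toggleAlan is a hypothetical helper, it assumes isActive() passes a boolean result to its callback, and it resolves with the state it requested, not with a state confirmed by the SDK.

```javascript
// Hypothetical helper (not part of the Alan AI SDK): reads the current state
// with AlanManager.isActive(callback), then calls deactivate() if the agentic
// interface is active or activate() if it is not.
// Assumes the callback receives (error, result) with a boolean result.
// Resolves with the requested state, not a state confirmed by the SDK.
function toggleAlan(alanManager) {
  return new Promise((resolve, reject) => {
    alanManager.isActive((error, active) => {
      if (error) {
        reject(error);
        return;
      }
      if (active) {
        alanManager.deactivate();
      } else {
        alanManager.activate();
      }
      resolve(!active);
    });
  });
}
```

For example, a button's onPress handler could call toggleAlan(AlanManager).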

getWakewordEnabled()

Use the getWakewordEnabled() method to check whether the wake word is enabled for the Alan AI agentic interface. For details, see getWakewordEnabled().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

AlanManager.getWakewordEnabled((error, result) => {
  if (error) {
    console.error(error);
  } else {
    console.log(`getWakewordEnabled ${result}`);
  }
});
```

setWakewordEnabled()

Use the setWakewordEnabled() method to enable or disable the wake word for the Alan AI agentic interface. For details, see setWakewordEnabled().

```javascript
import { NativeModules } from 'react-native';

const { AlanManager } = NativeModules;

// Pass true to enable the wake word or false to disable it
AlanManager.setWakewordEnabled(true);
```

Using handlers

You can use the following Alan AI handlers in your app:

onCommand handler

Use the onCommand handler to handle commands sent from the dialog script. For details, see onCommand handler.

```javascript
import { NativeEventEmitter, NativeModules } from 'react-native';

const { AlanEventEmitter } = NativeModules;
const alanEventEmitter = new NativeEventEmitter(AlanEventEmitter);

// Lifecycle methods of your App class component
componentDidMount() {
  // Handle commands from Alan AI Studio
  alanEventEmitter.addListener('onCommand', (data) => {
    console.log(`onCommand: ${JSON.stringify(data)}`);
  });
}

componentWillUnmount() {
  alanEventEmitter.removeAllListeners('onCommand');
}
```
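If your dialog script sends several different commands, the onCommand listener can route each one to its own function. This is a minimal sketch under two assumptions: the listener receives the object sent from the dialog script, and that object names the command in a single field. The field name is up to your script; the playCommand() example above uses action. createCommandDispatcher is a hypothetical helper, not part of the Alan AI SDK.

```javascript
// Hypothetical helper (not part of the Alan AI SDK): returns an onCommand
// listener that looks up a handler by the value of one field of the payload.
// Assumes the payload is the object sent from the dialog script.
function createCommandDispatcher(handlers, field = "command") {
  return function dispatch(data) {
    const name = data ? data[field] : undefined;
    // Only use the handler object's own keys, so names such as "toString"
    // do not resolve to inherited Object.prototype methods.
    if (!Object.prototype.hasOwnProperty.call(handlers, name)) {
      return false; // No handler registered for this command
    }
    handlers[name](data);
    return true;
  };
}
```

For example: alanEventEmitter.addListener('onCommand', createCommandDispatcher({ openSomePage: (data) => console.log(data) }, 'action'));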

onButtonState handler

Use the onButtonState handler to capture and handle the Alan AI agentic interface state changes. For details, see onButtonState handler.

```javascript
import { NativeEventEmitter, NativeModules } from 'react-native';

const { AlanEventEmitter } = NativeModules;
const alanEventEmitter = new NativeEventEmitter(AlanEventEmitter);

// Lifecycle methods of your App class component
componentDidMount() {
  // Handle button state changes
  alanEventEmitter.addListener('onButtonState', (state) => {
    console.log(`onButtonState: ${JSON.stringify(state)}`);
  });
}

componentWillUnmount() {
  alanEventEmitter.removeAllListeners('onButtonState');
}
```

onEvent handler

Use the onEvent handler to capture and handle events emitted by Alan AI, for example, to get the user’s utterances, the agentic interface responses and so on. For details, see onEvent handler.

```javascript
import { NativeEventEmitter, NativeModules } from 'react-native';

const { AlanEventEmitter } = NativeModules;
const alanEventEmitter = new NativeEventEmitter(AlanEventEmitter);

// Lifecycle methods of your App class component
componentDidMount() {
  // Handle events
  alanEventEmitter.addListener('onEvent', (payload) => {
    console.log(`onEvent: ${JSON.stringify(payload)}`);
  });
}

componentWillUnmount() {
  alanEventEmitter.removeAllListeners('onEvent');
}
```

Troubleshooting

If you encounter the following error:

Execution failed for task ':app:mergeDebugNativeLibs'

for files such as lib/arm64-v8a/libc++_shared.so, lib/x86/libc++_shared.so, lib/x86_64/libc++_shared.so and lib/armeabi-v7a/libc++_shared.so, open the build.gradle file at the Module level and add packaging options:

build.gradle (module level)
```groovy
android {
    packagingOptions {
        pickFirst 'lib/arm64-v8a/libc++_shared.so'
        pickFirst 'lib/x86/libc++_shared.so'
        pickFirst 'lib/x86_64/libc++_shared.so'
        pickFirst 'lib/armeabi-v7a/libc++_shared.so'
    }
}
```

If you encounter the following error:

Execution failed for task ':alan-ai_alan-sdk-react-native:verifyReleaseResources'

make sure your environment satisfies all conditions described in Setting up the development environment in the React Native documentation.

The minimum Android SDK version required by the Alan AI SDK is 21. If the version in your project is lower, you may encounter the following error:

AndroidManifest.xml Error: uses-sdk:minSdkVersion 16 cannot be smaller than version 21 declared in library [:alan_voice]

To fix it, open the android/app/build.gradle file, under defaultConfig, locate minSdkVersion and change the version to 21.